- Tags:: 📜Papers , Mlops

- Author:: Shreya Shankar, Rolando Garcia, Joseph M, Aditya G

- Link:: https://drive.google.com/open?id=1yZWpJJWLDsa7q2dEHUf6NjcOZoIA8DL6&authuser=mario.lopezmartinez87%40gmail.com&usp=drive_fs

- Source date:: 2022-09-16

- Finished date:: 2022-10-29

18 MLEs interviewed. Not a huge sample :/.

Things are still… bad.

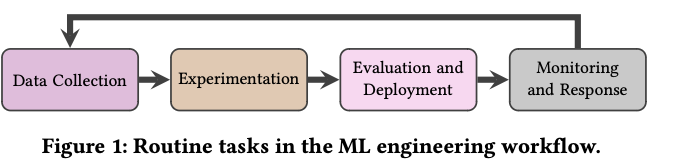

It is widely agreed that MLOps is hard (…) Most successful ML deployments seem to involve a “team of engineers who spend a significant portion of their time on the less glamorous aspects of ML like maintaining and monitoring ML pipelines”.

Although some people don’t agree: No, You Dont Need Mlops.

3 Vs that dictate success:

- Velocity: since ML is highly experimental.

- Validation (as early as possible): since errors in ML are expensive to handle (hard to debug).

- Versioning: to manage experiments and to have fallbacks for bugs that cannot be anticipated.

Experimentation

- In ML, collaboration with subject matter experts matters.

- Iterating on the data (e.g., features) yields higher velocity.

It’s gonna be something in our data that we can use to kind of push the boundary.

- Validate early to avoid wasting time (hence the importance of sandbox environments that mirror production).

- Config-driven development, to prevent errors from forgetting important but repetitive steps (e.g., setting random seeds).
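A minimal sketch of config-driven development, assuming a JSON config and a made-up `ExperimentConfig` schema (the field names, defaults, and the `run_experiment` stand-in are hypothetical, not from the paper). The idea: the harness, not the person, applies repetitive settings like the random seed, and every run stores the exact config it used.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    # Hypothetical schema: settings every run needs, kept in one place.
    name: str
    seed: int = 42
    learning_rate: float = 0.01
    features: tuple = ("age", "country")


def load_config(raw: str) -> ExperimentConfig:
    """Parse a JSON config; keys that are missing fall back to the defaults."""
    data = json.loads(raw)
    if "features" in data:
        # Store as a tuple so the frozen config stays hashable.
        data["features"] = tuple(data["features"])
    return ExperimentConfig(**data)


def run_experiment(cfg: ExperimentConfig) -> dict:
    # The harness seeds the RNG, so nobody has to remember to do it.
    rng = random.Random(cfg.seed)
    # Stand-in for training: a few pseudo-random "scores" that are reproducible.
    scores = [rng.random() for _ in range(3)]
    # Keep the exact config next to the results for later comparison.
    return {"config": asdict(cfg), "scores": scores}


if __name__ == "__main__":
    cfg = load_config('{"name": "baseline", "seed": 7}')
    print(run_experiment(cfg))
```

Two runs with the same config give identical scores; changing only the seed in the JSON changes them, and that change is visible in the stored config.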

“Keeping GPUs Warm” anti-pattern

ML is highly experimental…

A lot of ML is is like: people will claim to have like principled stances on why they did something and why it works. I think you can have intuitions that are useful and reasonable for why things should be good, but the most defining characteristic of [my most productive colleague] is that he has the highest pace of experimentation out of anyone. He’s always running experiments, always trying everything. I think this is relatively common: people just try everything and then backfit some nice-sounding explanation for why it works.

but with good judgment…

You can be overly concerned with keeping your GPUs warm, [so much] so that you don’t actually think deeply about what the highest value experiment is.

Although computing infrastructure could support many different experiments in parallel, the cognitive load of managing such experiments was too cumbersome for participants (P5, P10, P18, P19). In other words, having high velocity means drowning in a sea of versions of experiments. Rather, participants noted more success when pipelining learnings from one experiment into the next, like a guided search to find the best idea.

Validation

- Dynamic validation datasets: just as in traditional software engineering you write a regression test after finding a bug, you create validation datasets for subpopulations with failed predictions.

- Progressive rollouts, shadowing…

- Choosing the right metrics (though this is hard… Metrics Driven Product Development Is Hard).
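A sketch of dynamic validation datasets as a growing registry of named slices, in the spirit of adding one regression test per bug. `SliceRegistry`, the predicate-based slice definitions, and the accuracy threshold are illustrative assumptions, not the paper's tooling.

```python
from typing import Callable, Dict, List, Tuple

Example = Dict[str, object]       # one labeled row, e.g. {"country": "ES", "label": 1}
Model = Callable[[Example], int]  # any function returning a predicted label


class SliceRegistry:
    """Validation slices that grow over time, like a regression-test suite."""

    def __init__(self) -> None:
        self.slices: Dict[str, Callable[[Example], bool]] = {}

    def add_slice(self, name: str, predicate: Callable[[Example], bool]) -> None:
        # Called after a production failure is traced to a subpopulation.
        self.slices[name] = predicate

    def evaluate(self, model: Model, data: List[Example]) -> Dict[str, Tuple[float, int]]:
        """Return (accuracy, size) for the whole set and for every slice."""
        report: Dict[str, Tuple[float, int]] = {}
        groups = {"overall": lambda ex: True, **self.slices}
        for name, predicate in groups.items():
            rows = [ex for ex in data if predicate(ex)]
            if not rows:
                continue  # slice not represented in this dataset
            correct = sum(model(ex) == ex["label"] for ex in rows)
            report[name] = (correct / len(rows), len(rows))
        return report

    def failing(self, model: Model, data: List[Example], min_accuracy: float) -> List[str]:
        """Names of groups whose accuracy falls below the threshold."""
        return [name for name, (acc, _) in self.evaluate(model, data).items()
                if acc < min_accuracy]


if __name__ == "__main__":
    data = [{"country": "ES", "label": 1}, {"country": "ES", "label": 1},
            {"country": "US", "label": 0}, {"country": "US", "label": 0}]
    registry = SliceRegistry()
    registry.add_slice("country=ES", lambda ex: ex["country"] == "ES")
    always_zero = lambda ex: 0  # hypothetical model that ignores its input
    print(registry.evaluate(always_zero, data))
    print(registry.failing(always_zero, data, min_accuracy=0.4))
```

Overall accuracy can look acceptable while a registered slice fails outright, which is exactly the case a per-slice check is meant to catch before rollout.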

Monitoring

- Data distribution shifts have been studied technically but not operationally, i.e., not in terms of how humans debug such shifts in practice (Model Drifting). In practice, people mostly just retrain frequently.

- Old models as fallbacks.

- Rule-based layer as ultimate fallback.

- Basic data monitoring is useful, but more advanced monitoring is hard to configure (e.g., which thresholds to choose) and cannot prevent incidents:

We don’t find those metrics are useful. I guess, what’s the point in tracking these? Sometimes it’s really to cover my ass. If someone [hypothetically] asked, how come the performance dropped from X to Y, I could go back in the data and say, there’s a slight shift in the user behavior that causes this. So I can do an analysis of trying to convince people what happened, but can I prevent [the problem] from happening? Probably not. Is that useful? Probably not.
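The fallback items above (old models, then a rule-based layer) can be sketched as an ordered chain where the first predictor that returns a usable score wins. The three predictors and their feature names are hypothetical placeholders.

```python
from typing import Callable, Dict, List, Optional

Features = Dict[str, float]
Predictor = Callable[[Features], Optional[float]]


def predict_with_fallbacks(features: Features, chain: List[Predictor], default: float) -> float:
    """Return the first usable score from an ordered list of predictors."""
    for predictor in chain:
        try:
            score = predictor(features)
        except Exception:
            continue  # e.g. a missing feature or an unreachable model server
        if score is not None:
            return score
    return default  # only reached if every layer gave up


# Hypothetical layers, newest first.
def current_model(f: Features) -> Optional[float]:
    return 0.1 * f["clicks_7d"]  # raises KeyError if the feature is missing


def previous_model(f: Features) -> Optional[float]:
    return None if "age" not in f else 0.3  # abstains without its input


def rule_layer(f: Features) -> Optional[float]:
    return 1.0 if f.get("is_vip") else 0.0  # always answers


CHAIN = [current_model, previous_model, rule_layer]

if __name__ == "__main__":
    print(predict_with_fallbacks({"age": 30.0}, CHAIN, default=0.0))
```

Putting the rule layer last means the hard-coded `default` is rarely hit; it only guards against the rule layer itself failing.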

And the curse of dimensionality:

If the number of metrics tracked is so large, even with only a handful of columns, the probability that at least one column violates constraints is high!
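To see why, assume (simplistically) that each of `m` checks independently raises a false alarm with probability `α` when nothing has really changed. Then P(at least one alert) = `1 - (1 - α)^m`. The column and metric counts below are made up, and the Bonferroni-style `α / m` adjustment is a standard statistical trick, not something the paper prescribes.

```python
def prob_any_alert(num_checks: int, false_alarm_rate: float) -> float:
    """P(at least one alert) when nothing has actually shifted.

    Assumes independent checks with the same false-alarm rate; real column
    metrics are correlated, so read this as intuition, not an exact figure.
    """
    return 1.0 - (1.0 - false_alarm_rate) ** num_checks


if __name__ == "__main__":
    checks = 10 * 5  # e.g. 10 columns x 5 metrics each (made-up numbers)
    print(round(prob_any_alert(checks, 0.05), 3))           # ~0.923
    print(round(prob_any_alert(checks, 0.05 / checks), 3))  # Bonferroni-style: ~0.049
```

With only 50 checks at a 5% false-alarm rate each, some alert fires on roughly 92% of "quiet" days; tightening each threshold brings that back near 5%, at the cost of missing smaller real shifts.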

KISS

- Not much hyperparameter tuning, to prevent overfitting.

- Tree-based models over deep learning.

- But deep learning can be used to consolidate pipelines:

P16 described training a small number of higher-capacity models rather than a separate model for each target: “There were hundreds of products that [customers] were interested in, so we found it easier to instead train 3 separate classifiers that all shared the same underlying embedding…from a neural network.”

A general trend is to try to move more into the neural network, and to combine models wherever possible so there are fewer bigger models. Then you don’t have these intermediate dependencies that cause drift and performance regressions…you eliminate entire classes of bugs and and issues by consolidating all these different piecemeal stacks.
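A rough sketch of the "shared embedding, several classifiers" structure P16 describes, in plain Python so it runs anywhere. The single tanh layer, the weights, and the head names are hypothetical; in a real system the encoder and heads would be trained jointly in a deep learning framework. This only shows the inference-time shape: one embedding, several small heads, instead of one pipeline per target.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


def shared_embedding(features: Vector, weights: List[Vector]) -> Vector:
    """One shared encoder (here a single tanh layer) reused by every task."""
    return [math.tanh(sum(w * x for w, x in zip(row, features))) for row in weights]


def make_head(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """A small logistic head that only sees the shared embedding."""
    def head(embedding: Vector) -> float:
        z = sum(w * e for w, e in zip(weights, embedding)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return head


def predict_all(features: Vector, encoder_weights: List[Vector],
                heads: Dict[str, Callable[[Vector], float]]) -> Dict[str, float]:
    # The embedding is computed once; each head adds only a few parameters.
    embedding = shared_embedding(features, encoder_weights)
    return {name: head(embedding) for name, head in heads.items()}


if __name__ == "__main__":
    encoder = [[0.5, -0.2], [0.1, 0.3]]  # 2 input features -> 2-dim embedding
    heads = {
        "classifier_a": make_head([1.0, -1.0], 0.0),
        "classifier_b": make_head([0.5, 0.5], -0.1),
        "classifier_c": make_head([-1.0, 2.0], 0.2),
    }
    print(predict_all([1.0, 2.0], encoder, heads))
```

Adding a new target means adding a head, not a new feature pipeline, which is the "fewer bigger models" trade the quote describes.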

Other pains

- Diverse forms of data leakage that can be very hard to track down (only noticed as lower-than-expected performance).

- Bad code quality: driven by Jupyter notebooks and by the perception that some good practices contradict the exploratory nature of ML.

- And this scary sentence:

While some types of bugs were discussed by multiple participants (…) the vast majority of bugs described to us in the interviews were seemingly bespoke and not shared among participants.

Which leads to paranoia:

After several iterations of chasing bespoke ML-related bugs in production, ML engineers that we interviewed developed a sense of paranoia while evaluating models offline, possibly as a coping mechanism.

So a lot of effort should go into making debugging easy:

… these one-off bugs from the long tail showed similar symptoms of failure (…) a large discrepancy between offline validation accuracy and production accuracy immediately after deployment (…). The similarity in symptoms highlighted the similarity in methods for isolating bugs; they were almost always some variant of slicing and dicing data for different groups of customers.

…as an organization, you need to make the friction very low for investigating what the data actually looks like, [such as] looking at specific examples.
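A minimal sketch of the "slicing and dicing" step: compute accuracy per customer segment offline and in production, then rank segments by how much accuracy dropped. The `(segment, was_correct)` record format and the segment names are assumptions; segments present on only one side are skipped.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each record: (customer segment, whether the prediction was correct).
Record = Tuple[str, bool]


def accuracy_by_segment(records: Iterable[Record]) -> Dict[str, float]:
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for segment, correct in records:
        totals[segment] += 1
        hits[segment] += int(correct)
    return {seg: hits[seg] / totals[seg] for seg in totals}


def largest_gaps(offline: Iterable[Record], production: Iterable[Record],
                 top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank segments by offline-minus-production accuracy, biggest drop first."""
    off = accuracy_by_segment(offline)
    prod = accuracy_by_segment(production)
    gaps = [(seg, off[seg] - prod[seg]) for seg in off.keys() & prod.keys()]
    return sorted(gaps, key=lambda item: item[1], reverse=True)[:top_k]


if __name__ == "__main__":
    offline = [("enterprise", True), ("enterprise", True), ("smb", True), ("smb", False)]
    production = [("enterprise", False), ("enterprise", True), ("smb", True), ("smb", False)]
    print(largest_gaps(offline, production))
```

The ranked list is only a starting point: the segment at the top is where to go look at specific examples.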