Metadata

- Author:: Douglas W

- Full Title:: How to Measure Anything: Finding the Value of “Intangibles” in Business

- Category:: 🗞️Articles

- Document Tags:: Priority

- URL:: https://readwise.io/reader/document_raw_content/322687835

- Read date:: 2025-06-17

Highlights

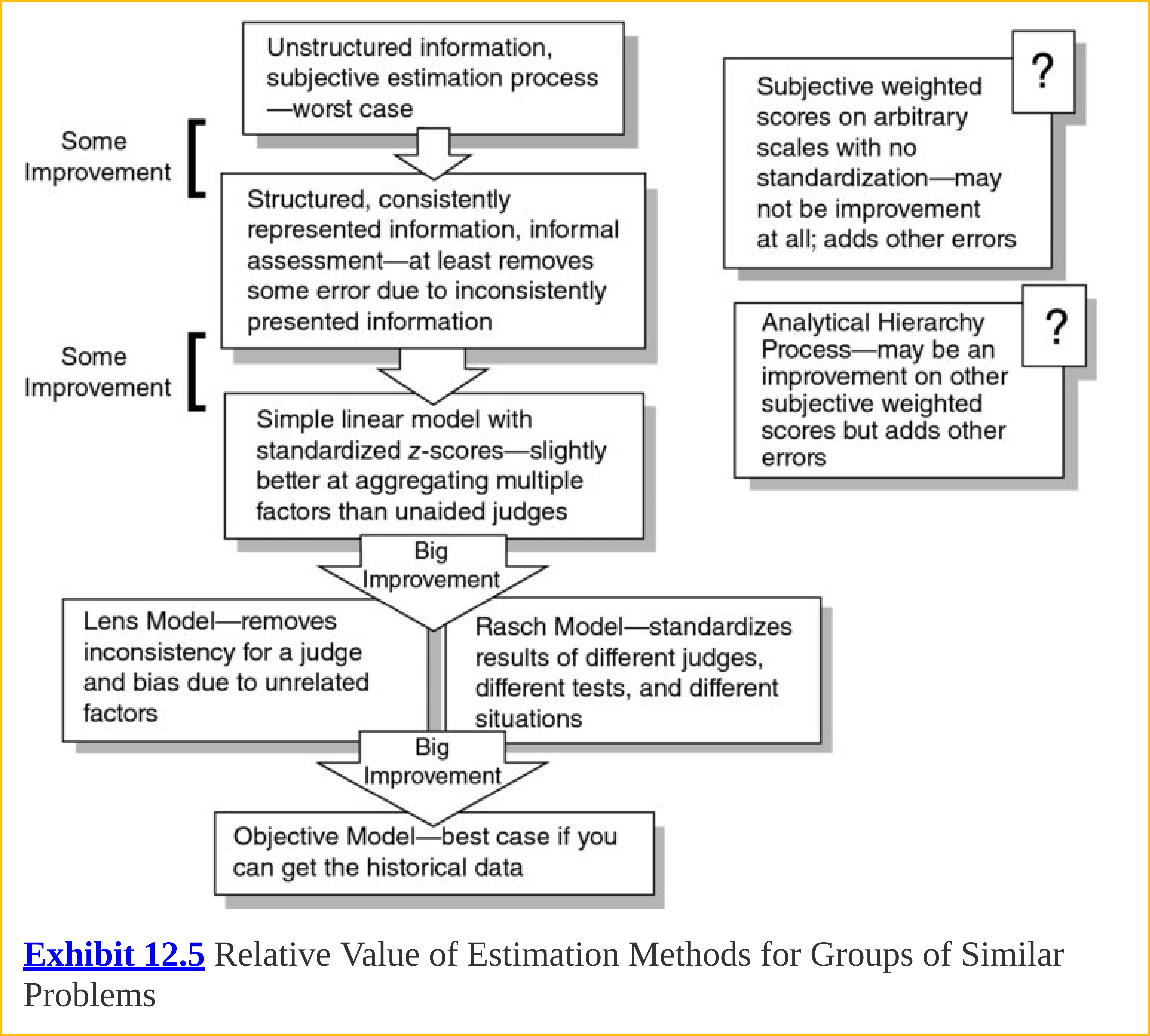

The benefits of modeling decisions quantitatively may not be obvious and may even be controversial to some. I have known managers who simply presume the superiority of their intuition over any quantitative model (this claim, of course, is never itself based on systematically measured outcomes of their decisions).

So don’t confuse the proposition that anything can be measured with everything should be measured. This book supports the first proposition while the second proposition directly contradicts the economics of measurements made to support decisions.

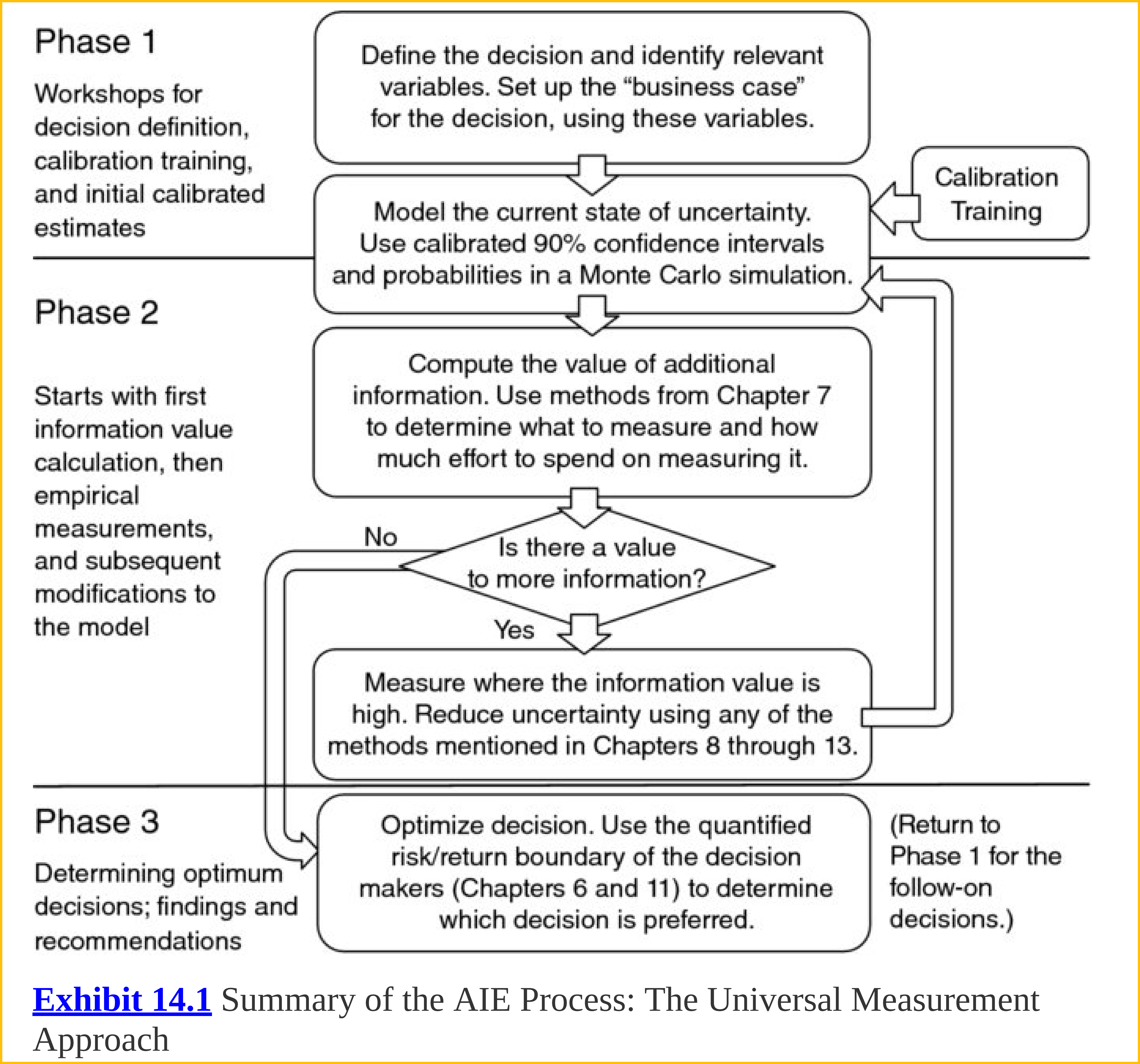

Applied Information Economics: A Universal Approach to Measurement

1. Define the decision.

2. Determine what you know now.

3. Compute the value of additional information. (If none, go to step 5.)

4. Measure where information value is high. (Return to steps 2 and 3 until further measurement is not needed.)

5. Make a decision and act on it. (Return to step 1 and repeat as each action creates new decisions.)
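Step 3 can be sketched for the simplest case, a single go/no-go choice, as the expected value of perfect information (EVPI): how much better, on average, you would do if you could see the true outcome before choosing. This is a minimal Monte Carlo sketch, not the book's procedure; it assumes (hypothetically) that the net benefit is normally distributed, that its 90% CI is known, and that "no-go" is worth zero.

```python
import random
import statistics

def normal_from_90ci(lower, upper):
    """Convert a 90% CI into (mean, sd), assuming a normal distribution."""
    mean = (lower + upper) / 2
    sd = (upper - lower) / 3.29  # a 90% CI spans about 3.29 standard deviations
    return mean, sd

def evpi(lower, upper, trials=100_000, seed=1):
    """EVPI for go (payoff = net benefit) vs. no-go (payoff = 0)."""
    rng = random.Random(seed)
    mean, sd = normal_from_90ci(lower, upper)
    draws = [rng.gauss(mean, sd) for _ in range(trials)]
    # Value of deciding now: commit to whichever option has the higher expected payoff.
    value_now = max(statistics.fmean(draws), 0.0)
    # Value with perfect information: pick the better option in every scenario.
    value_perfect = statistics.fmean(max(x, 0.0) for x in draws)
    return value_perfect - value_now

# Hypothetical 90% CI for net benefit, in dollars.
print(round(evpi(-200_000, 1_000_000)))
```

With this made-up range, EVPI comes out at roughly $25,000, an upper bound on what any measurement of that net benefit could be worth. If it were near zero, the procedure would skip to step 5.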

Definition of Measurement

Measurement: A quantitatively expressed reduction of uncertainty based on one or more observations.
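One way to make "a quantitatively expressed reduction of uncertainty" concrete is to compare the width of a 90% CI before and after a few observations. The sketch below assumes (not from the source) a normal prior built from the initial 90% CI and normally distributed measurement error with a known standard deviation, and applies the standard normal-normal Bayesian update. The range, observations, and noise level are hypothetical.

```python
import math

Z90 = 1.645  # half-width of a normal 90% CI, in standard deviations

def update_90ci(lower, upper, observations, noise_sd):
    """Narrow a 90% CI using observations, assuming a normal prior and
    normally distributed measurement error with known noise_sd."""
    prior_mean = (lower + upper) / 2
    prior_var = ((upper - lower) / (2 * Z90)) ** 2
    n = len(observations)
    obs_mean = sum(observations) / n
    # Precision-weighted combination of prior and data (normal-normal update).
    post_var = 1 / (1 / prior_var + n / noise_sd ** 2)
    post_mean = post_var * (prior_mean / prior_var + n * obs_mean / noise_sd ** 2)
    half = Z90 * math.sqrt(post_var)
    return post_mean - half, post_mean + half

before = (10.0, 50.0)  # hypothetical initial 90% CI
after = update_90ci(*before, observations=[28.0, 35.0, 31.0], noise_sd=10.0)
print(before, "->", tuple(round(x, 1) for x in after))
```

Three noisy observations shrink the hypothetical 10-to-50 range to roughly 22.5 to 39.7. The uncertainty is reduced, not eliminated, which is all the definition requires.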

…message of HTMA is still the same as it has been in the earlier two editions. I wrote this book to correct a costly myth that permeates many organizations today: that certain things can’t be measured.

Important factors with names like “improved word-of-mouth advertising,” “reduced strategic risk,” or “premium brand positioning” were being ignored in the evaluation process because they were considered immeasurable.

New highlights added 2025-06-18

some things seem immeasurable because we simply don’t know what we mean when we first pose the question. In this case, we haven’t unambiguously defined the object of measurement. If someone asks how to measure “strategic alignment” or “flexibility” or “customer satisfaction,” I simply ask: “What do you mean, exactly?” It is interesting how often people further refine their use of the term in a way that almost answers the measurement question by itself.

“Security” was a vague concept until they decomposed it into what they actually expected to observe.

Clarification Chain

- If it matters at all, it is detectable/observable.

- If it is detectable, it can be detected as an amount (or range of possible amounts).

- If it can be detected as a range of possible amounts, it can be measured.

Rule of Five

There is a 93.75% chance that the median of a population is between the smallest and largest values in any random sample of five from that population.

it is important to note that the Rule of Five estimates only the median of a population
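The 93.75% figure follows from a short argument: each random draw has a 50% chance of falling above the median, so the median lies outside the sample's range only when all five draws land on the same side, which happens with probability 2 × (1/2)^5 = 1/16. The simulation below checks this and also illustrates the caveat that the rule covers only the median. The lognormal population is an arbitrary, hypothetical choice of skewed distribution.

```python
import math
import random

def coverage(true_value, trials=50_000, seed=42):
    """Share of random 5-draw samples whose min..max range brackets true_value.
    The population is assumed, for illustration, to be lognormal(mu=0, sigma=1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.lognormvariate(0, 1) for _ in range(5)]
        if min(sample) < true_value < max(sample):
            hits += 1
    return hits / trials

exact = 1 - 2 * 0.5 ** 5           # median missed only if all 5 fall on one side
median = 1.0                       # median of lognormal(0, 1) is exp(0)
mean = math.exp(0.5)               # mean of lognormal(0, 1) is exp(sigma**2 / 2)

print(exact)                       # 0.9375
print(round(coverage(median), 3))  # close to 0.9375
print(round(coverage(mean), 3))    # noticeably lower
```

The same five-draw range brackets this population's mean only about 84% of the time, because in a skewed distribution the mean does not sit at the 50th percentile (here it is near the 69th).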

In this last study, Kahneman and Tversky stated: Our thesis is that people have strong intuitions about random sampling; that these intuitions are wrong in fundamental respects; that these intuitions are shared by naive subjects and by trained scientists; and that they are applied with unfortunate consequences in the course of scientific inquiry. —Kahneman, Tversky

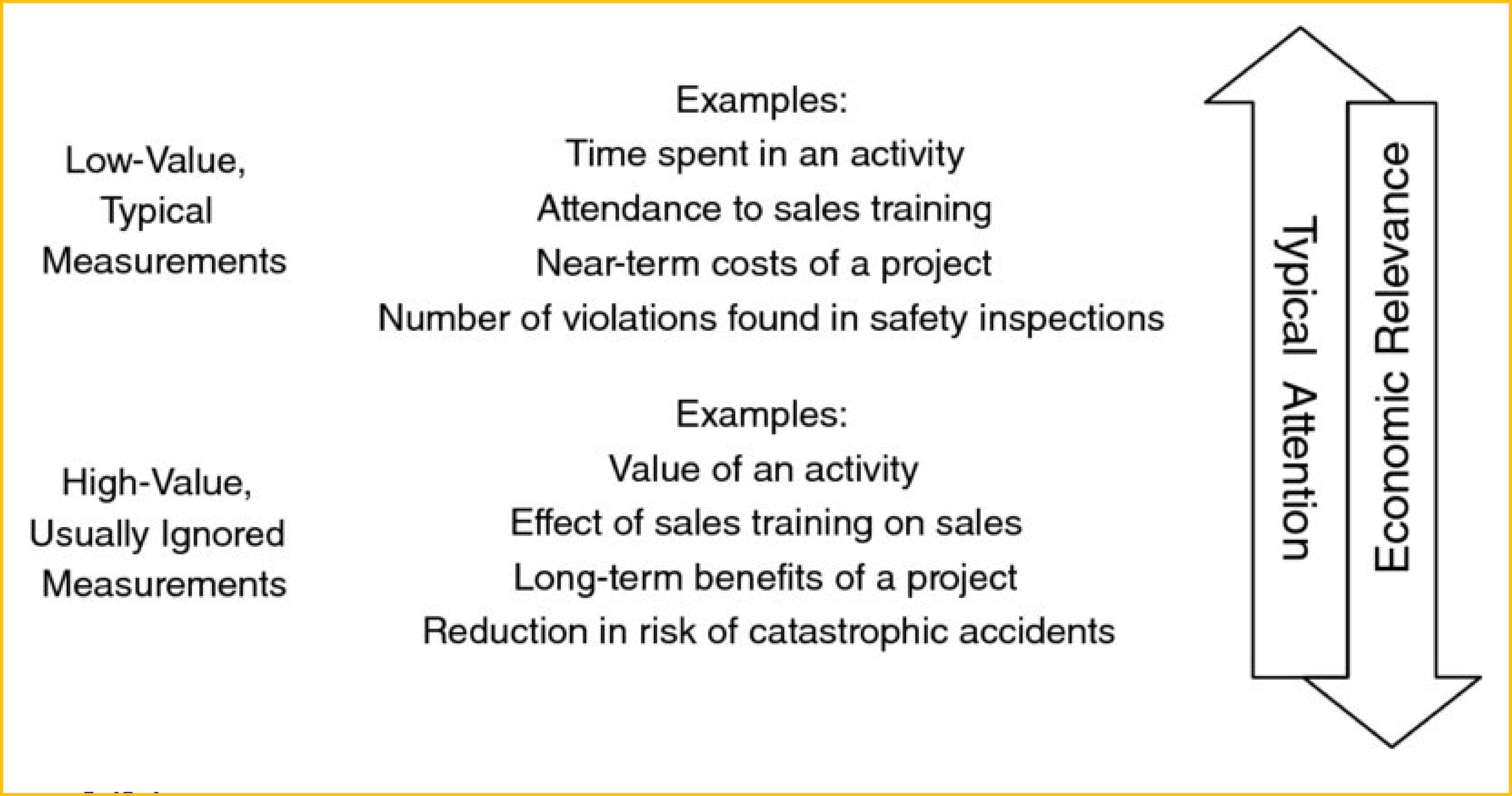

Usually, Only a Few Things Matter—But They Usually Matter a Lot

In most business cases, most of the variables have an “information value” at or near zero. But usually at least some variables have an information value that is so high that some deliberate measurement effort is easily justified.
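The claim that only a few variables carry real information value can be checked one variable at a time. This sketch estimates, by Monte Carlo, the expected value of perfect information about each variable alone (sometimes called partial EVPI) for a hypothetical go/no-go decision whose net benefit is the sum of three independent, normally distributed components given as 90% CIs. The variable names, ranges, and additive model are all illustrative assumptions.

```python
import random
import statistics

def normal_from_90ci(lower, upper):
    """Convert a 90% CI into (mean, sd), assuming a normal distribution."""
    return (lower + upper) / 2, (upper - lower) / 3.29

def partial_info_values(cis, trials=50_000, seed=7):
    """Value of perfect information about each variable alone, for a go/no-go
    decision whose net benefit is the sum of the variables (no-go pays 0)."""
    rng = random.Random(seed)
    params = {name: normal_from_90ci(*ci) for name, ci in cis.items()}
    total_mean = sum(m for m, _ in params.values())
    values = {}
    for name, (m, sd) in params.items():
        rest = total_mean - m  # other variables stay at their expected values
        y = [rng.gauss(m, sd) + rest for _ in range(trials)]
        value_now = max(statistics.fmean(y), 0.0)                   # decide without the info
        value_informed = statistics.fmean(max(v, 0.0) for v in y)  # decide after seeing it
        values[name] = value_informed - value_now
    return values

# Hypothetical components of annual net benefit, as 90% CIs in dollars.
cis = {
    "labor savings": (50_000, 400_000),
    "license cost": (-120_000, -100_000),
    "training cost": (-60_000, -40_000),
}
for name, v in sorted(partial_info_values(cis).items(), key=lambda kv: -kv[1]):
    print(f"{name:>14}: {v:,.0f}")
```

With these made-up ranges, only the wide labor-savings estimate has a meaningful information value (on the order of $18,000); the two narrow cost ranges come out at essentially zero, so measuring them further would not pay.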

New highlights added 2025-06-20

Another variation of the economic objection is based on the idea that quantitative models simply don’t improve estimates and decisions. Therefore, no cost of measurement is justified and we should simply rely on the alternative: unaided expert judgment. This is not only a testable claim but, in fact, has been tested with studies that rival the size of the largest clinical drug trials. One pioneer in testing the claims about the performance of experts was the famed psychologist Paul Meehl (1920–2003). Starting with research he conducted as early as the 1950s, he gained notoriety among psychologists by measuring their performance and comparing it to quantitative models. Meehl’s extensive research soon reached outside of psychology and showed that simple statistical models were outperforming subjective expert judgments in almost every area of judgment he investigated including predictions of business failures and the outcomes of sporting events.

Four Useful Measurement Assumptions

1. It’s been measured before.

2. You have far more data than you think.

3. You need far less data than you think.

4. Useful, new observations are more accessible than you think.

Dashboards and Decisions: Do They Go Together?

It is also routinely a wasted resource. The data on the dashboard was usually not selected with specific decisions in mind based on specific conditions for action.

New highlights added 2025-06-29

if a measurement matters to you at all, it is because it must inform some decision that is uncertain and has negative consequences if it turns out wrong.

Risk Paradox

If an organization uses quantitative risk analysis at all, it is usually for routine operational decisions. The largest, most risky decisions get the least amount of proper risk analysis.

The McNamara Fallacy

“The first step is to measure whatever can be easily measured. This is okay as far as it goes. The second step is to disregard that which can’t easily be measured or to give it an arbitrary quantitative value. This is artificial and misleading. The third step is to presume that what can’t be measured easily isn’t important. This is blindness. The fourth step is to say that what can’t easily be measured really doesn’t exist. This is suicide.”

Decompose It

Many measurements start by decomposing an uncertain variable into constituent parts to identify directly observable things that are easier to measure.
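Decomposition pairs naturally with a small Monte Carlo simulation: estimate each directly observable component as a 90% CI, then combine the components to get a range for the original variable. The downtime example, its ranges, the normal-distribution assumption, and the multiplicative model are hypothetical illustrations, not the book's worked example.

```python
import random
import statistics

def sample_from_90ci(rng, lower, upper):
    """Draw from a normal whose 90% CI is (lower, upper), floored at zero."""
    mean, sd = (lower + upper) / 2, (upper - lower) / 3.29
    return max(rng.gauss(mean, sd), 0.0)

def decomposed_90ci(parts, trials=50_000, seed=3):
    """Multiply independent components and return the 5th and 95th percentiles."""
    rng = random.Random(seed)
    totals = []
    for _ in range(trials):
        product = 1.0
        for lower, upper in parts:
            product *= sample_from_90ci(rng, lower, upper)
        totals.append(product)
    cuts = statistics.quantiles(totals, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
    return cuts[0], cuts[-1]

# Hypothetical decomposition of annual downtime cost:
# outages per year x hours per outage x cost per hour (each a 90% CI).
parts = [(2, 8), (1, 4), (5_000, 20_000)]
low, high = decomposed_90ci(parts)
print(f"90% CI for annual downtime cost: {low:,.0f} to {high:,.0f}")
```

The simulated range is much narrower than multiplying the three lower bounds and the three upper bounds together (here $10,000 to $640,000), because the components rarely all land at their extremes at once.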

As odd as this might seem, populations that have power law distributions literally have no definable mean. Again, in such cases, sampling will eventually produce an outlier that is so different from the rest of the sample, that the estimate of the mean is greatly widened. As more sampling is done, an even more extreme outlier will appear that will widen the 90% CI again, and so on. But this kind of distribution still has characteristics that can be measured in relatively few observations. These methods are known as “nonparametric.” We will show one solution to the problem of nonconverging estimates of means shortly.
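The non-converging mean is easy to reproduce. This sketch draws from a Pareto distribution with tail index 1 (an assumed example of a power law with no finite mean) using Python's `random.paretovariate`, and tracks the sample mean and sample median as the sample grows. It only illustrates the problem; the book's own solution is not part of these highlights.

```python
import random
import statistics

def running_estimates(alpha=1.0, checkpoints=(100, 1_000, 10_000, 100_000), seed=11):
    """Sample mean vs. sample median as the sample grows, for a Pareto
    distribution with tail index alpha (no finite mean when alpha <= 1)."""
    rng = random.Random(seed)
    draws = []
    results = []
    for n in checkpoints:
        while len(draws) < n:
            draws.append(rng.paretovariate(alpha))  # minimum value is 1
        results.append((n, statistics.fmean(draws), statistics.median(draws)))
    return results

for n, mean, median in running_estimates():
    print(f"n={n:>7,}  mean={mean:>10.1f}  median={median:.3f}")
# The theoretical median for alpha = 1 is 2 ** (1 / alpha) = 2.
```

From checkpoint to checkpoint, the mean tends to keep jumping or drifting upward as new extreme values arrive, while the median, a nonparametric statistic, settles near its theoretical value of 2.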

New highlights added 2025-07-04

William Edwards Deming, the great statistician who influenced statistical quality control methods more than any other individual in the twentieth century, said: “Significance or the lack of it provides no degree of belief—high, moderate, or low—about prediction of performance in the future, which is the only reason to carry out the comparison, test, or experiment in the first place.”

This is the key point about statistical significance for me.

part of the statistical significance calculation—the chance of seeing an observed result assuming it’s a fluke—can be salvaged as a step in the direction toward what the decision maker does need.
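The salvageable piece, the chance of seeing a result at least this extreme if it were only a fluke, is a one-sided p-value. The sketch below computes it for a hypothetical binomial example using only the standard library. In line with Deming's point above, this number alone says nothing about how the result will perform in the future; turning it into what a decision maker needs would also require prior knowledge and the stakes of the decision, which this sketch does not model.

```python
from math import comb

def fluke_probability(successes, trials, p_null=0.5):
    """One-sided p-value: chance of at least `successes` out of `trials`
    if only the null rate p_null is at work (i.e., the result is a fluke)."""
    return sum(
        comb(trials, k) * p_null ** k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )

# Hypothetical: a new sales script wins 15 of 20 head-to-head trials.
print(round(fluke_probability(15, 20), 4))  # 0.0207
```

For 15 wins out of 20 with a 50% fluke rate, there is roughly a 2% chance of doing at least this well by luck alone.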
